Augmented Reality (AR) is no longer just a trend but an important feature for many mobile applications. With ARKit and RealityKit at their disposal, developers can create highly engaging and immersive iOS applications for gaming, education, retail, industry, and many other areas.

In this blog, we give an overview of AR on iOS: the foundations, frameworks, features, use cases, and challenges, followed by future trends and how a development partner like HexaCoder can help brands build compelling AR iOS apps.

The Foundations of AR on iOS

What Is AR on iOS?

AR on iOS refers to blending virtual 3D content into the camera view of the real world, aligned with real-world surfaces and context in real time. Unlike VR, which replaces the environment, AR augments it by overlaying graphics, animations, or interactive models onto what the user already sees.

Because Apple controls both hardware and software, iOS AR has the advantage of sensors (camera, motion, depth), high-performance processors, and frameworks such as ARKit to deliver smooth and robust experiences.

Core AR Frameworks: ARKit, RealityKit, and AR Quick Look

First introduced in 2017, ARKit is Apple's primary AR framework. It handles motion tracking, world tracking, plane detection, and scene understanding.

RealityKit builds on top of ARKit and provides higher-level rendering, physics, animation, and content management.

AR Quick Look lets users place simple 3D models in augmented reality straight from Safari, Mail, Files, and other iOS apps, without the developer having to build a full custom AR app.

Together, these frameworks determine how convincingly the virtual and physical worlds come together.
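To make AR Quick Look concrete, here is a minimal sketch of presenting a bundled USDZ model from inside an app. The class name and the asset name "chair.usdz" are placeholders we made up for illustration; the sketch assumes the file has been added to the app bundle.

```swift
import UIKit
import QuickLook
import ARKit

/// Presents a bundled USDZ model with AR Quick Look.
/// "chair.usdz" is a hypothetical asset name used only for illustration.
final class ProductPreviewController: UIViewController, QLPreviewControllerDataSource {
    private let modelURL = Bundle.main.url(forResource: "chair", withExtension: "usdz")

    func showPreview() {
        guard modelURL != nil else { return }   // asset missing from the bundle
        let preview = QLPreviewController()
        preview.dataSource = self
        present(preview, animated: true)
    }

    func numberOfPreviewItems(in controller: QLPreviewController) -> Int {
        modelURL == nil ? 0 : 1
    }

    func previewController(_ controller: QLPreviewController,
                           previewItemAt index: Int) -> QLPreviewItem {
        // Only called when numberOfPreviewItems returned 1, so the URL exists here.
        let item = ARQuickLookPreviewItem(fileAt: modelURL!)
        item.allowsContentScaling = true   // let the user pinch to resize the model
        return item
    }
}
```

Calling `showPreview()` from a button handler would open the system viewer, where the user can switch between the object view and the AR view.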

How ARKit Works in iOS

For AR to feel realistic, it must solve three main challenges: tracking the device, understanding the scene, and rendering content.

Motion & World Tracking

ARKit combines visual input from the device camera with motion data from the gyroscope and accelerometer to track the movement of the device through 3D space. This technique is generally called visual-inertial odometry: estimating position from visual and inertial data.

ARKit continuously updates its estimate of the camera's position and orientation relative to the environment, so that virtual content stays accurately anchored as the user moves around.
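The tracking result is visible to an app as a camera transform on every frame. The following is a minimal sketch, assuming a bare `ARSession` without any rendering; the class name `TrackingObserver` is our own.

```swift
import ARKit

/// Runs world tracking and reads the camera pose that ARKit estimates each frame.
final class TrackingObserver: NSObject, ARSessionDelegate {
    let session = ARSession()

    func start() {
        // World tracking is not available on every device or in every simulator.
        guard ARWorldTrackingConfiguration.isSupported else { return }
        session.delegate = self
        session.run(ARWorldTrackingConfiguration())
    }

    // Called once per camera frame while the session is running.
    func session(_ session: ARSession, didUpdate frame: ARFrame) {
        // The last column of the 4x4 camera transform holds the device position
        // in world space, in meters, relative to the session's world origin.
        let position = frame.camera.transform.columns.3
        print("camera position:", position.x, position.y, position.z)
    }
}
```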

Plane Detection and Scene Understanding

ARKit detects horizontal and vertical planes (floors, tabletops, walls), estimates ambient lighting, and locates feature points in the surroundings. This allows virtual objects to sit realistically on flat surfaces and adapt to the lighting.

With more recent APIs, especially on newer iPhones and iPads equipped with LiDAR, ARKit gains a richer understanding of the scene, including depth awareness, occlusion (virtual objects hidden behind real objects), and instant placement.
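As a rough illustration of plane detection and light estimation, the sketch below enables both plane types and logs what ARKit reports. The class name `PlaneObserver` is ours, and printing stands in for whatever the app would actually do with the data.

```swift
import ARKit

/// Logs detected planes and the ambient light estimate from a world-tracking session.
final class PlaneObserver: NSObject, ARSessionDelegate {
    let session = ARSession()

    func start() {
        let config = ARWorldTrackingConfiguration()
        config.planeDetection = [.horizontal, .vertical]
        session.delegate = self
        session.run(config)
    }

    // ARKit adds an ARPlaneAnchor for each surface it detects.
    func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
        for case let plane as ARPlaneAnchor in anchors {
            let kind = plane.alignment == .horizontal ? "horizontal" : "vertical"
            print("found \(kind) plane at", plane.transform.columns.3)
        }
    }

    func session(_ session: ARSession, didUpdate frame: ARFrame) {
        // Ambient intensity is reported in lumens; about 1000 is neutral lighting.
        if let light = frame.lightEstimate {
            print("ambient intensity:", light.ambientIntensity)
        }
    }
}
```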

Rendering and Content Integration

Once the scene is understood, ARKit passes position and pose data to a rendering engine (RealityKit, SceneKit, or Metal) that draws the virtual objects over the camera feed. Developers can then apply physics, animation, interactivity, shadows, and much more.

Together, these steps produce the immersive, interactive, and believable experiences that AR on iOS offers.

Key Features & Capabilities in iOS AR - Especially with ARKit 6

ARKit has evolved steadily, with each release adding new capabilities. The features below are all available as of ARKit 6, although several of them first appeared in earlier releases.

4K HDR Video Capture

With ARKit 6, AR scenes can now be recorded at 4K resolution with HDR, permitting content creators to capture immersive AR video for social sharing, editing, or marketing.
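Opting in to 4K capture is a configuration choice. A minimal sketch, assuming iOS 16 or later; the function name is our own, and the configuration falls back to the default video format on devices without 4K support.

```swift
import ARKit

/// Builds a world-tracking configuration that opts in to 4K capture where available.
@available(iOS 16.0, *)
func make4KConfiguration() -> ARWorldTrackingConfiguration {
    let config = ARWorldTrackingConfiguration()
    // This is nil on devices that cannot deliver 4K frames to ARKit.
    if let format = ARWorldTrackingConfiguration.recommendedVideoFormatFor4KResolution {
        config.videoFormat = format
    }
    // HDR is opt-in and depends on the selected video format.
    if config.videoFormat.isVideoHDRSupported {
        config.videoHDRAllowed = true
    }
    return config
}
```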

Depth API & Instant AR

On LiDAR-equipped devices (newer iPhones and iPads), the Depth API gives ARKit per-pixel depth information, improving occlusion, realism, and surface detection. LiDAR also supports Instant AR, which lets users place objects immediately without first scanning the environment.
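A small sketch of how an app might request scene depth and inspect the resulting depth map. Both function names are ours; the support check matters because the `.sceneDepth` frame semantic is only available on supported hardware.

```swift
import ARKit

/// Enables per-pixel scene depth where the device supports it.
func makeDepthConfiguration() -> ARWorldTrackingConfiguration {
    let config = ARWorldTrackingConfiguration()
    if ARWorldTrackingConfiguration.supportsFrameSemantics(.sceneDepth) {
        config.frameSemantics.insert(.sceneDepth)
    }
    return config
}

/// Returns the pixel dimensions of the depth map attached to a frame, if there is one.
func depthMapSize(of frame: ARFrame) -> (width: Int, height: Int)? {
    // sceneDepth is nil when the semantic was not enabled or is unsupported.
    guard let depthMap = frame.sceneDepth?.depthMap else { return nil }
    return (CVPixelBufferGetWidth(depthMap), CVPixelBufferGetHeight(depthMap))
}
```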

Motion Capture & People Occlusion

ARKit added the ability to perform motion capture (tracking joints of the human body) and people occlusion (letting virtual content naturally appear in front of or behind people).
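These two features are enabled differently: people occlusion is a frame semantic on a world-tracking session, while motion capture uses its own body-tracking configuration. The sketch below shows both under that assumption; `BodyObserver` and the function names are illustrative.

```swift
import ARKit

/// World tracking with people occlusion, where the device supports it.
func makePeopleOcclusionConfiguration() -> ARWorldTrackingConfiguration {
    let config = ARWorldTrackingConfiguration()
    if ARWorldTrackingConfiguration.supportsFrameSemantics(.personSegmentationWithDepth) {
        config.frameSemantics.insert(.personSegmentationWithDepth)
    }
    return config
}

/// Receives body anchors from a session running ARBodyTrackingConfiguration.
final class BodyObserver: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let body as ARBodyAnchor in anchors {
            // Joint transforms are expressed relative to the body anchor itself.
            if let head = body.skeleton.modelTransform(for: .head) {
                print("head offset from body anchor:", head.columns.3)
            }
        }
    }
}

/// Starts motion capture. The caller must keep `observer` alive,
/// because ARSession holds its delegate weakly.
func startBodyTracking(on session: ARSession, observer: BodyObserver) {
    guard ARBodyTrackingConfiguration.isSupported else { return }
    session.delegate = observer
    session.run(ARBodyTrackingConfiguration())
}
```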

Simultaneous Front & Back Camera

Applications can use face tracking on the front camera and world tracking on the back camera at the same time, which is ideal for AR selfie effects combined with world interactions.
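In code, this amounts to turning on user face tracking inside a world-tracking configuration. A minimal sketch; the names are ours, and reading the `jawOpen` blend shape is just one example of the face data an app could use to drive world content.

```swift
import ARKit

/// World tracking on the back camera plus face tracking on the front camera.
func makeFrontAndBackConfiguration() -> ARWorldTrackingConfiguration {
    let config = ARWorldTrackingConfiguration()
    if ARWorldTrackingConfiguration.supportsUserFaceTracking {
        config.userFaceTrackingEnabled = true
    }
    return config
}

/// Reads a facial expression value from the face anchors the session delivers.
final class FaceObserver: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let face as ARFaceAnchor in anchors {
            // Blend shape values range from 0 (neutral) to 1 (fully expressed).
            let jawOpen = face.blendShapes[.jawOpen]?.floatValue ?? 0
            print("jaw open:", jawOpen)
        }
    }
}
```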

Scene Geometry

Scene Geometry creates a topological map of a room (walls, floors, objects), providing better occlusion and more realistic interaction between virtual and real elements.
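At the API level, this map arrives as mesh anchors once scene reconstruction is enabled. A minimal sketch, assuming a LiDAR device; `MeshObserver` is our own name, and logging the face count stands in for real mesh processing.

```swift
import ARKit

/// Requests a classified scene mesh and logs the mesh chunks ARKit produces.
final class MeshObserver: NSObject, ARSessionDelegate {
    func makeConfiguration() -> ARWorldTrackingConfiguration {
        let config = ARWorldTrackingConfiguration()
        // Scene reconstruction is only supported on LiDAR-equipped devices.
        if ARWorldTrackingConfiguration.supportsSceneReconstruction(.meshWithClassification) {
            config.sceneReconstruction = .meshWithClassification
        }
        return config
    }

    // The room mesh is delivered in pieces, one ARMeshAnchor per region.
    func session(_ session: ARSession, didAdd anchors: [ARAnchor]) {
        for case let mesh as ARMeshAnchor in anchors {
            print("mesh chunk with \(mesh.geometry.faces.count) faces")
        }
    }
}
```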

These enhancements make AR on iOS more compelling and closer to real-world quality.

Use Cases and Applications of Augmented Reality on iOS

Retail and E-Commerce:

With AR on iOS, consumers can virtually try out products, such as furniture and decor, in their own space. With AR Quick Look, a user can preview 3D models straight from Safari or an app. Seeing a product realistically in context boosts buyer confidence, which can reduce product returns.

Gaming and Entertainment:

AR games turn the user's surroundings into an "interactive playground". Players can interact with virtual creatures, objects, or entire scenes layered onto the physical space, blending real-world motion with digital creativity for highly immersive gameplay.

Education and Training:

On iOS, augmented reality enriches learning by overlaying interactive 3D models, from anatomical structures to historical artifacts and engineering parts, onto real environments. This immersion makes complex concepts more tangible and improves memory retention for students and professionals.

Industrial Maintenance:

AR iOS applications let technicians and engineers view instructions, diagrams, or system data directly on the real equipment. Because virtual schematics are overlaid on the hardware, AR reduces manual intervention and downtime, improving efficiency for equipment installation, troubleshooting, and repair work.

Navigation and Wayfinding:

AR overlays arrows, guides, and directions on the real-world camera view, making indoor and outdoor navigation intuitive. When walking through an airport or a city street, users follow live, moving visuals instead of static maps.

Social and Media:

AR masks and effects built on iOS power engaging content shared on platforms like Instagram and Snapchat. These experiences use both the front and rear cameras to enhance storytelling, self-expression, and brand engagement in fun, shareable formats.

Challenges and Considerations for iOS AR Development

Device Capability & Fragmentation:

Only newer iPhones and iPads support advanced ARKit features such as LiDAR-based depth and Instant AR. Developers therefore need to build AR applications that scale down gracefully on older hardware without breaking the user experience or core functionality.
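One way to structure this is to decide on a feature tier once at launch. The sketch below is plain Swift with no ARKit dependency; the `ARCapabilities` struct, the `ExperienceTier` enum, and the tier rules are all hypothetical choices for illustration. On a device, the flags would be filled from checks such as `ARWorldTrackingConfiguration.isSupported` and `ARWorldTrackingConfiguration.supportsSceneReconstruction(.mesh)`.

```swift
/// Capabilities reported by the device. On a real device these flags would be
/// filled in from ARKit support checks; here they are plain values.
struct ARCapabilities {
    var worldTracking: Bool
    var sceneDepth: Bool
    var sceneReconstruction: Bool
}

/// Hypothetical feature tiers for this sketch; these are not ARKit types.
enum ExperienceTier {
    case full       // scene mesh and depth-based occlusion
    case standard   // plane detection only
    case fallback   // no AR; show a plain 3D viewer instead
}

/// Picks the richest tier the device can support.
func chooseTier(for caps: ARCapabilities) -> ExperienceTier {
    guard caps.worldTracking else { return .fallback }
    return (caps.sceneDepth && caps.sceneReconstruction) ? .full : .standard
}
```

Keeping the decision in one pure function makes the fallback behavior easy to unit test without a device.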

Performance and the Battery:

AR applications rely on constant camera input for real-time rendering and motion tracking. These operations consume a lot of CPU and GPU power, and with it battery. Optimizing frame rates and managing resources while keeping graphics quality in check is vital for an AR application to run smoothly.

Lighting and Environmental Complexity:

Much of AR's realism depends on the lighting and surface texture of the physical environment. Plane detection struggles in dim lighting or on smooth, featureless surfaces. Developers therefore need to design for robust tracking across varied real-world conditions, for example by guiding the user when tracking quality drops.
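ARKit reports when tracking degrades and why, which an app can turn into on-screen guidance. A minimal sketch; the hint wording and the names `hint(for:)` and `TrackingHintObserver` are our own.

```swift
import ARKit

/// Maps ARKit's tracking state to a short hint for the user.
/// The wording of the hints is illustrative.
func hint(for state: ARCamera.TrackingState) -> String? {
    switch state {
    case .normal:
        return nil
    case .notAvailable:
        return "Tracking is unavailable."
    case .limited(.insufficientFeatures):
        return "Point at a surface with more detail, or add some light."
    case .limited(.excessiveMotion):
        return "Move the device more slowly."
    case .limited(.initializing), .limited(.relocalizing):
        return "Hold on while tracking starts."
    case .limited:
        return "Tracking is limited."
    }
}

/// Forwards tracking changes to the hint function above.
final class TrackingHintObserver: NSObject, ARSessionDelegate {
    func session(_ session: ARSession, cameraDidChangeTrackingState camera: ARCamera) {
        if let message = hint(for: camera.trackingState) {
            print(message)   // a real app would show this in the UI
        }
    }
}
```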

Occlusion and Realism:

For AR visuals to be convincing, virtual objects have to appear correctly behind and in front of real things. Accurate occlusion requires very precise depth mapping. Any mismatch can break immersion and erode the user's trust in the AR experience.

Privacy and Permissions:

Because AR applications use the camera and motion sensors, Apple enforces strict permission rules. Apps must tell users why the data is needed, which builds security and trust while keeping the app compliant with App Store and privacy requirements.
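In practice, the camera permission is the one every AR app must handle. The sketch below checks and requests it before an AR session starts; the function name is ours, and it assumes the app's Info.plist already contains an `NSCameraUsageDescription` string.

```swift
import AVFoundation

/// Checks camera access before starting AR. The app's Info.plist must also contain
/// an NSCameraUsageDescription string explaining why the camera is needed.
func ensureCameraAccess(_ completion: @escaping (Bool) -> Void) {
    switch AVCaptureDevice.authorizationStatus(for: .video) {
    case .authorized:
        completion(true)
    case .notDetermined:
        // Shows the system prompt; the callback may arrive on a background queue.
        AVCaptureDevice.requestAccess(for: .video) { granted in
            DispatchQueue.main.async { completion(granted) }
        }
    default:
        // Denied or restricted: the app should explain how to enable access in Settings.
        completion(false)
    }
}
```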

User Experience Design:

An effective AR app balances functionality with clarity. Cluttered interfaces or overlays confuse users, and simpler interfaces usually work better. Developers should build intuitive controls, accessible onboarding tips, and visual cues that guide people through seamless AR interactions.
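For onboarding, ARKit ships a standard coaching overlay that prompts the user to move the device until tracking is ready. A minimal sketch, assuming a RealityKit `ARView` is already on screen; the function name is our own.

```swift
import UIKit
import ARKit
import RealityKit

/// Adds Apple's standard coaching overlay on top of an AR view.
func addCoachingOverlay(to arView: ARView) {
    let overlay = ARCoachingOverlayView()
    overlay.session = arView.session
    overlay.goal = .horizontalPlane          // coach until a horizontal plane is found
    overlay.activatesAutomatically = true    // reappears if tracking is lost
    overlay.frame = arView.bounds
    overlay.autoresizingMask = [.flexibleWidth, .flexibleHeight]
    arView.addSubview(overlay)
}
```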

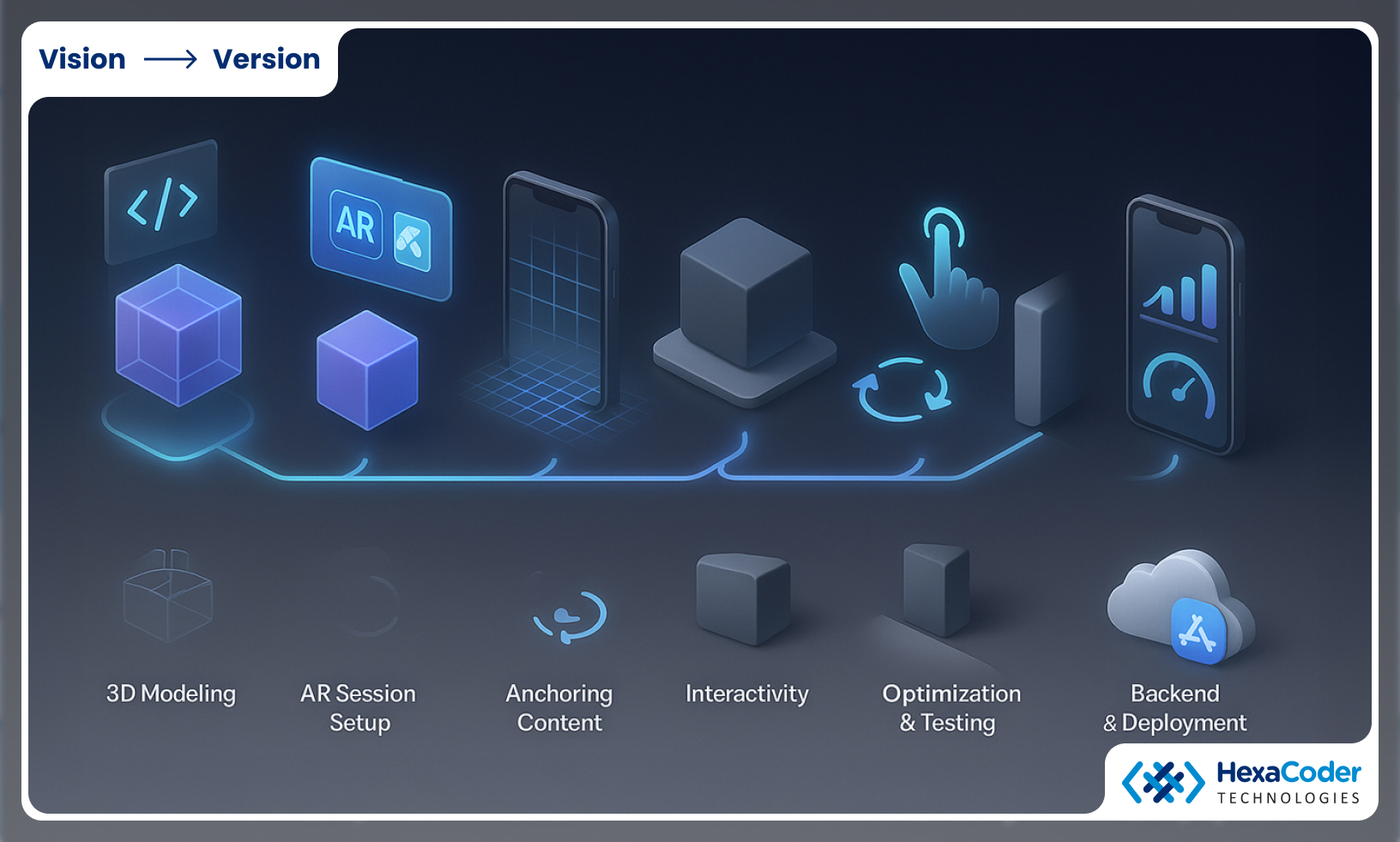

How to Build an AR iOS App: High-Level Workflow

Here’s a simplified pipeline:

Choose the AR Framework:

The first decision is which rendering framework to pair with ARKit on iOS: RealityKit, SceneKit, or Metal. The capabilities, performance, and flexibility that come with your choice directly affect rendering quality and physical interactions, while ARKit itself supplies motion tracking and plane detection. Together they lay the groundwork for your AR application.

Model 3D Content:

Preparing your 3D content means building the objects, textures, and animations. Effective modeling keeps polygon counts low enough for smooth performance while still looking realistic in AR. Organizing your content well also makes it easier to integrate, scale, and interact with, deepening the immersive experience without taxing the device or compromising rendering quality.

Set Up AR Session:

Next, set up an AR session with tracking, plane detection, and light estimation. This is how the app understands the real-world environment, so the session must provide reliable anchoring, alignment, and performance under different lighting and device-movement conditions.
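A typical place for this setup is the view controller that owns the AR view. The sketch below assumes RealityKit's `ARView` and shows one possible lifecycle: run the session when the screen appears and pause it when the screen goes away. The class name is illustrative.

```swift
import UIKit
import ARKit
import RealityKit

/// Hosts an ARView and ties the AR session to the screen's lifecycle.
final class ARSessionViewController: UIViewController {
    private let arView = ARView(frame: .zero)

    override func viewDidLoad() {
        super.viewDidLoad()
        arView.frame = view.bounds
        arView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        view.addSubview(arView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        let config = ARWorldTrackingConfiguration()
        config.planeDetection = [.horizontal, .vertical]
        config.environmentTexturing = .automatic
        // Start from a clean state each time the screen appears.
        arView.session.run(config, options: [.resetTracking, .removeExistingAnchors])
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Pausing stops camera capture and tracking while the view is off screen.
        arView.session.pause()
    }
}
```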

Anchor Virtual Content:

The 3D objects you add are anchored to detected real-world surfaces, so the virtual content stays fixed relative to the physical environment and appears realistic to the user. Correct anchoring prevents drifting or floating objects, keeping the augmentation contextually relevant and immersive.
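A common anchoring pattern is tap-to-place: cast a ray from the touch point onto a surface and attach content at the hit location. The sketch below assumes RealityKit and uses a generated 10 cm box in place of a real model; `PlacementController` is our own name.

```swift
import UIKit
import ARKit
import RealityKit

/// Places a small box on a horizontal surface wherever the user taps.
/// The owner must keep this object alive, since gesture targets are not retained.
final class PlacementController: NSObject {
    private let arView: ARView

    init(arView: ARView) {
        self.arView = arView
        super.init()
        let tap = UITapGestureRecognizer(target: self, action: #selector(handleTap(_:)))
        arView.addGestureRecognizer(tap)
    }

    @objc private func handleTap(_ gesture: UITapGestureRecognizer) {
        let point = gesture.location(in: arView)
        // Cast a ray from the tapped pixel onto a detected or estimated horizontal plane.
        guard let hit = arView.raycast(from: point,
                                       allowing: .estimatedPlane,
                                       alignment: .horizontal).first else { return }

        let box = ModelEntity(mesh: .generateBox(size: 0.1),
                              materials: [SimpleMaterial(color: .systemBlue, isMetallic: false)])
        box.position.y = 0.05   // lift by half the height so the box rests on the surface

        // The anchor pins the content to a fixed pose in world space.
        let anchor = AnchorEntity(world: hit.worldTransform)
        anchor.addChild(box)
        arView.scene.addAnchor(anchor)
    }
}
```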

Add Interactivity:

Add touch gestures, animations, and event triggers so users can move, scale, rotate, or animate objects. Well-thought-out interactivity provides intuitive, low-friction controls and lets objects respond to the user, which increases satisfaction and engagement time in the app.
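With RealityKit, the standard move, rotate, and scale gestures can be attached to an entity in a couple of lines. A minimal sketch; the function name is ours, and it assumes the model has already been added to the scene.

```swift
import RealityKit

/// Lets the user drag, rotate, and pinch-scale a model with the built-in gestures.
func makeInteractive(_ model: ModelEntity, in arView: ARView) {
    // Gestures hit-test against collision shapes, so the entity needs them first.
    model.generateCollisionShapes(recursive: true)
    arView.installGestures([.translation, .rotation, .scale], for: model)
}
```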

Handle Occlusion, Shadows, and Depth:

For virtual objects to interact realistically with the physical world, occlusion, shadows, and depth perception must be handled correctly. These elements prevent objects from appearing to float or overlap incorrectly, and they are essential for believable AR visuals, especially in complex, heavy-duty, or crowded environments.
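In RealityKit, much of this can be switched on through scene-understanding options once the device provides a scene mesh. The sketch below is one possible setup under that assumption; the function name is ours, and devices without scene reconstruction simply skip the mesh-based options.

```swift
import ARKit
import RealityKit

/// Turns on mesh-based occlusion and lighting where the device supports it.
func enableRealism(in arView: ARView) {
    let config = ARWorldTrackingConfiguration()
    config.planeDetection = [.horizontal]
    config.environmentTexturing = .automatic   // reflections sampled from the real scene

    if ARWorldTrackingConfiguration.supportsSceneReconstruction(.mesh) {
        config.sceneReconstruction = .mesh
        // .occlusion hides virtual content behind real geometry;
        // .receivesLighting lets the real-world mesh pick up virtual lighting effects.
        arView.environment.sceneUnderstanding.options.formUnion([.occlusion, .receivesLighting])
    }
    arView.session.run(config)
}
```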

Optimize Rendering and Performance:

Tuning 3D assets, shaders, and AR algorithms keeps performance smooth and avoids issues such as frame drops, overheating, and battery drain. Efficient rendering leads to stable tracking and steady frame rates, which give AR visuals a polished, professional feel.
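One inexpensive optimization is to drop optional post-processing when the device heats up. The sketch below assumes RealityKit; which effects to treat as optional is our own choice for illustration.

```swift
import Foundation
import RealityKit

/// Drops optional post-processing effects when the device reports thermal pressure.
func applyRenderBudget(to arView: ARView,
                       thermalState: ProcessInfo.ThermalState = ProcessInfo.processInfo.thermalState) {
    let optionalEffects: ARView.RenderOptions = [.disableMotionBlur,
                                                 .disableDepthOfField,
                                                 .disableCameraGrain]
    switch thermalState {
    case .serious, .critical:
        arView.renderOptions.formUnion(optionalEffects)   // turn the effects off
    default:
        arView.renderOptions.subtract(optionalEffects)    // allow the effects again
    }
}
```

An app could call this whenever `ProcessInfo.thermalStateDidChangeNotification` fires, so rendering quality recovers once the device cools down.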

Test Across Devices and Lighting Conditions:

Test the app across multiple iOS devices, lighting conditions, and locations to confirm it performs well. Testing may reveal tracking errors, occlusion errors, or misaligned textures. Thorough QA during development helps verify that performance and user experience stay consistent across iOS devices and real-world environments.

Connect with a Backend:

Backend integration connects the AR features to underlying systems for analytics, content management, and asset delivery. With a backend, AR content can be updated dynamically, usage can be tracked, and new features can be introduced over time. This supports a scaled rollout with tailored experiences, continuous monitoring, and data-driven improvements that help future-proof the app.

Deploy, Maintain, and Iterate:

You can then deploy the AR app to the App Store and continuously monitor its performance. Routine updates deliver bug fixes as well as compatibility upgrades and new features. Iterating on user feedback and backend metrics keeps the AR experience relevant and steadily improves it for users.

HexaCoder's Role:

HexaCoder offers end-to-end AR development services for iOS, covering 3D modeling, AR session setup, backend integration, performance optimization, and app release. HexaCoder guarantees a high-performance AR application that meets business goals and delivers trusted, scalable, and engaging experiences for users on iOS.

Here are examples of AR applications built with ARKit, along with some success stories.

IKEA Place: lets users virtually place pieces of furniture in different spots at home to see what fits. (WIRED)

Measure (built into iOS): uses ARKit to turn your iPhone into a measurement tool.

Many retail brands use AR Quick Look: an 'In AR' button typically appears on the product's webpage and lets you view the model in your own space.

These real-world applications show that AR reduces ambiguity, enhances buyer confidence, and improves conversions.

Future Trends & What Comes Next

Advances in hardware and software are opening exciting new frontiers for AR on iOS:

- AR Headsets/Glasses Integration: future Apple devices extend AR beyond handheld devices.

- Persistent AR Anchors: AR content tied to a real-world location across different sessions.

- Collaborative AR: multiple users interacting with the same AR scene at the same time (shared AR).

- Better Occlusion & Depth: more natural blending between real objects and AR content.

- AI + AR: using computer vision to detect objects, gestures, or context that trigger AR behavior.

- Cross-Platform AR: experiences that extend seamlessly from iOS to the web and to AR and VR glasses.
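For persistence across sessions, ARKit already offers one building block: the session's world map can be saved and later used to relocalize. The sketch below shows that approach under the assumption that the app stores the map in a local file; both function names are ours, and this is only one of several ways persistent anchors could be built.

```swift
import ARKit

/// Saves the session's current world map, including its anchors, to a file.
func saveWorldMap(from session: ARSession, to url: URL) {
    session.getCurrentWorldMap { worldMap, error in
        guard let map = worldMap else {
            print("world map unavailable:", error?.localizedDescription ?? "unknown error")
            return
        }
        do {
            let data = try NSKeyedArchiver.archivedData(withRootObject: map,
                                                        requiringSecureCoding: true)
            try data.write(to: url, options: .atomic)
        } catch {
            print("saving failed:", error)
        }
    }
}

/// Builds a configuration that asks ARKit to relocalize against a saved world map.
func restoreConfiguration(from url: URL) throws -> ARWorldTrackingConfiguration {
    let data = try Data(contentsOf: url)
    let config = ARWorldTrackingConfiguration()
    config.initialWorldMap = try NSKeyedUnarchiver.unarchivedObject(ofClass: ARWorldMap.self,
                                                                    from: data)
    return config
}
```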

Brands that embrace AR now will be ready for next-generation immersive applications.

Summary & Takeaways

With ARKit and RealityKit powering AR on iOS, it is among the most advanced software platforms for blending the virtual with the real.

Core capabilities include motion tracking, plane detection, depth sensing, and rendering integration.

ARKit 6 adds 4K video capture and builds on the depth APIs, motion capture, and LiDAR-based instant placement.

Use cases for AR span almost every field, including retail, gaming, education, industry, and navigation.

The main challenges for AR are device performance, device variability, realism, privacy, and user experience design.

Creating a successful AR application takes detailed planning, optimization, and testing.

The future promises more shared experiences, intelligent AR, and immersive hardware.

If your brand is looking to bring AR to the iPhone, HexaCoder can be your one-stop partner, handling everything from 3D modeling and AR application development through performance tuning and deployment across iOS devices. Let’s build AR experiences that truly excite users and set your brand apart!